U.S. Covid-19 Supercomputing Group Evaluates Year-Long Effort

March 24, 2021 - 1:45PM

Dow Jones News

By Sara Castellanos

Researchers working on nearly 100 projects related to Covid-19 around the globe have had free access to some of the world's most powerful computers in the past year, courtesy of a consortium led in part by the U.S. government and technology companies.

Members of the Covid-19 High-Performance Computing Consortium on Tuesday spoke on the progress of their initiative and advocated for a formal organization that would be in charge of making computing resources available in the event of future pandemics, hurricanes, oil spills, wildfires and other natural disasters.

"The consortium is proof we were able to act fast and act together," said Dario Gil, senior vice president and director of the research division of International Business Machines Corp., who helped create the consortium.

Announced in March of last year, the consortium has 43 members, including IBM, the national laboratories of the Department of Energy, Amazon.com Inc.'s Amazon Web Services, Microsoft Corp., Intel Corp., Alphabet Inc.'s Google Cloud and Nvidia Corp.

Collectively, the group helped researchers world-wide gain access to more than 600 petaflops of computing capacity, plus more than 6.8 million compute nodes, such as computer processor chips, memory and storage components, and over 50,000 graphics-processing units.

Among the nearly 100 approved projects was one in which researchers at Utah State University worked with the Texas Advanced Computing Center, part of the University of Texas at Austin, and others to model the way virus particles disperse in a room. The goal was to understand the distribution of Covid-19 virus particles in an enclosed space.

Researchers from the University of Tennessee, Knoxville worked with Google and Oak Ridge National Laboratory on another project to identify multiple already-approved drug compounds that could inhibit the coronavirus. Two of them are currently in clinical trials.

Members of the group reviewed more than 190 project proposals from academia, healthcare organizations and companies world-wide, approving 98. Consortium representatives with backgrounds in areas such as high-performance computing, biology and epidemiology chose the projects based on scientific merit and need for computing capacity.

The results of many of these studies were used to inform local and regional government officials, said John Towns, executive associate director of engagement at the National Center for Supercomputing Applications, part of the University of Illinois at Urbana-Champaign. "A number of these things were being used as supporting evidence for decision makers," said Mr. Towns, who is also a member of the consortium's executive committee.

The consortium is still accepting applications for projects.

The group is now advocating for a formal entity called the National Strategic Computing Reserve to accelerate the pace of scientific discovery in future times of crisis. The organization would enable access to software expertise, data and computing resources that can be used by researchers. Federal officials would have to enact a law to approve such an organization and grant it funding.

"Computing and data analysis will play an increasingly important role in addressing future national emergencies, whether they be pandemics or other events such as future pandemics, tornadoes, wildfires or nuclear disasters," said Manish Parashar, director of the office of advanced cyberinfrastructure at the National Science Foundation, and a member of the consortium's executive committee.

Write to Sara Castellanos at sara.castellanos@wsj.com

(END) Dow Jones Newswires

March 24, 2021 14:30 ET (18:30 GMT)

Copyright (c) 2021 Dow Jones & Company, Inc.

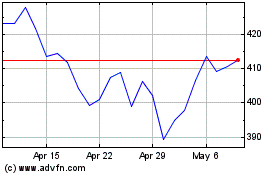

Microsoft (NASDAQ:MSFT)

Historical Stock Chart

From Mar 2024 to Apr 2024

Microsoft (NASDAQ:MSFT)

Historical Stock Chart

From Apr 2023 to Apr 2024