Form PX14A6G - Notice of exempt solicitation submitted by non-management

May 23 2024 - 4:17PM

Edgar (US Regulatory)

May 22, 2024

A message to META investors: Vote FOR on Proposal Eight Regarding Report on Human Rights Risks in Non-US Markets

Our proposal requests a report to shareholders on the effectiveness of measures Meta is taking to prevent and mitigate human rights risks in its five largest non-US markets (based on number of users) relating to the proliferation of hate speech, disinformation, and incitement to violence enabled by its Instagram and Facebook platforms. The company has responded by noting its policy positions but has also noted in its 2021 Civil Rights Audit Update that:

Though implementing these 16 recommendations is important to improving our content moderation policies, needless to say, there is much that still needs to be done and policies are constantly evolving to meet the needs of our users.1

Our request for a report on content moderation performance in the five largest non-US markets asks Meta’s management to provide investors with transparent data on these issues in markets of immense significance to the growth and success of our company’s platforms. A failure to moderate content effectively will lead to further regulatory efforts that may curb profits and growth.

We are particularly concerned given that, in just the past few weeks, we have seen evidence of a lack of progress in Meta’s largest market, India, and we are now hearing from Brazil that disinformation content on Meta platforms is hindering response efforts to the severe flooding in Rio Grande do Sul.

In April and May, a series of investigations published by Ekō during India’s ongoing election has revealed how Meta has facilitated2 far-right networks reaching millions of Indian voters with Islamophobic hate ads.

_____________________________

1 https://about.fb.com/wp-content/uploads/2021/11/Metas-Progress-on-Civil-Rights-Audit-Commitments.pdf

2 https://aks3.eko.org/pdf/Slander_Lies_and_Incitement_Report.pdf

The far-right’s use of Meta as an incubator of hate speech has been extensively documented for nearly a decade. The new report provides clear evidence of how Meta’s “Indian election preparation”3 statement is already failing due to its piecemeal actions against a tide of systemic issues embedded in its harmful business model. The report underscores the urgent need for mechanisms to curb the influence of paid advertisements by shadow actors on social media platforms and safeguard India’s democratic ethos.

Key findings include:

- 1M USD spent by 22 far-right shadow advertisers over 90 days on Meta, accounting for nearly 22% of the total sum of “issues, elections or politics” advertisements in India across the same time period.
- 36 ads potentially breaking Indian election laws by pushing hate speech, Islamophobia, communal violence, and misinformation, amassing between 65M and 66M impressions. The ads include:
  - Islamophobic tropes and disinformation targeting Muslims: depicting them as sexually violent invaders, “instigating communal riots”, and “patrons of terror”
  - Hindu supremacist narratives: ads promoting narratives to recreate India into a Hindu nation and claiming “Hindustan is a country for Hindus”, as well as hashtags tied to Hindu supremacist groups
  - Targeting of women lawmakers, journalists, and activists as “anti-India”
  - Aggressive and violent rhetoric against the BJP opposition: referring to opponents as a “virus” that needs to be eradicated, claiming that a BJP candidate will “break their [DMK] spine”, and using insults such as “demon” and “poisonous snake”
- Researchers uncovered a coordinated network led by a public page named Ulta Chashma, which has nine other pages sharing content, hashtags, and ad payments. This network amassed 10.53M interactions and 34.64M video views within 90 days, with a loophole allowing early political ad postings, indicating a breach of Meta’s ad transparency policy.
- 23.13M interactions on 22 far-right publisher pages over 90 days.
- None of the far-right publishers analyzed in the report could be reached at the phone numbers provided in the disclaimers in Meta’s Ad Library.

This week a new report4 also documents how Meta approved AI-manipulated political ads seeking to spread disinformation and incite religious violence during India's elections. Between May 8th and May 13th, Meta approved 14 highly inflammatory ads. These ads called for violent uprisings targeting Muslim minorities, disseminated blatant disinformation exploiting communal or religious conspiracy theories prevalent in India's political landscape, and incited violence through Hindu supremacist narratives.

_____________________________

3 https://about.fb.com/news/2024/03/how-meta-is-preparing-for-indian-general-elections-2024/

4 https://aks3.eko.org/pdf/Meta_AI_ads_investigation.pdf

Accompanying each ad text were manipulated images generated by AI image tools, showing how quickly and easily this new technology can be deployed to amplify harmful content. Meta’s systems did not block researchers from posting political and incendiary ads during the election “silence period”. The process of setting up the Facebook accounts was extremely simple, and researchers were able to post these ads from outside of India. Researchers pulled the ads before they were actually published.

As we write, Brazil is experiencing devastating floods. Our proposal comes amidst the rescue response to this historic flooding, which has been significantly impeded by disinformation and conspiracy theories pushed by the far-right and pro-Bolsonaro allies. The floods have already killed 161 people and displaced over 580,000.

Brazilian fact-checkers5 are working around the clock to counter the infodemic, which includes:

- Allegations that a local government is repackaging public donations with official logos to appear as if they are from federal agencies.
- Claims that Lula’s government denied donations from certain countries.
- False claims that “Starlink”, Musk's satellite internet, is the only available internet service in the state; at least two other operators are fully functional.
- An old audio clip from 2019 regarding Israeli military rescue support, circulated as if it were current.
- Inflated numbers of hospital deaths.

The most damaging narratives discredit federal authorities, suggesting they are blocking or denying rescue efforts and donations to victims. Other disinformation includes false claims about climate change and about international celebrities criticizing the government, as well as the use of unrelated images and videos to exaggerate the tragedy’s death toll.

In light of these current examples of Meta’s lack of effective content moderation in two of its largest non-US markets, the proposal’s request for a report to shareholders on the effectiveness of measures Meta is taking to prevent and mitigate human rights risks in its five largest non-US markets (based on number of users) is compelling. As investors, it is essential that we have a transparent and accurate view of content moderation capacity and competence in such key markets.

_____________________________

5 https://www.estadao.com.br/estadao-verifica/fake-news-enchente-chuvas-rio-grande-sul/?j=1268203&sfmc_sub=1001900989&l=9451_HTML&u=36879072&mid=534001280&jb=7011&utm_medium=email&utm_source=salesforce&utm_campaign=sqeng-estadao-verifica-fake-news-rs&utm_term=20240515&utm_content=ler-agora-digital

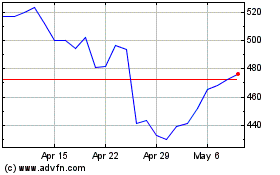
